std::bodun::blog

PhD student at University of Texas at Austin 🤘. Doing systems for ML.


Dive into TensorIR

TensorIR is a compiler abstraction in TVM for optimizing programs built from tensor computation primitives. Imagine a DNN task as a graph in which each node represents a tensor computation. TensorIR describes how each node (each tensor computation primitive) in that graph is carried out. This post walks through my attempt to implement 2D convolution in TensorIR. It is derived from the Machine Learning Compilation course offered by Tianqi Chen.

Implement 2D Convolution

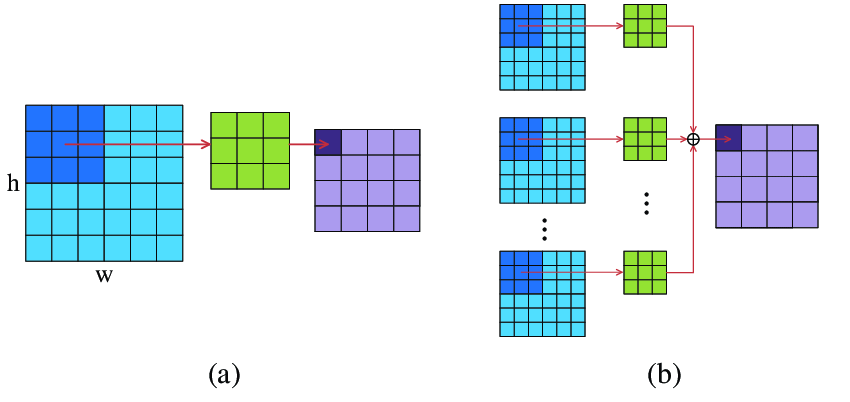

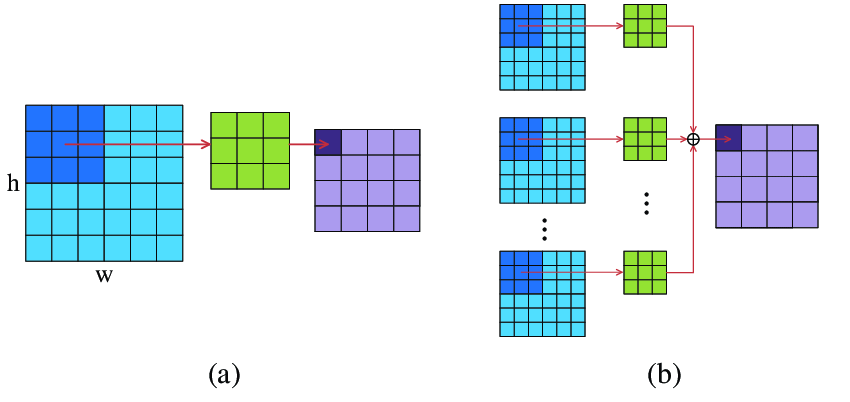

2D convolution is a common operation in image processing. The images above capture how it operates: a kernel slides across the input, and at each position the element-wise products are summed into one output value. I won't go into details here, but you can find plenty of information about convolution online.
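For readers who prefer code to pictures, here is a minimal NumPy sketch of the sliding-window computation, assuming NCHW layout, stride 1, and no padding. The function name `conv2d_reference` and the tiny inputs `x` and `w_ones` are hypothetical and chosen only for illustration; this is not the implementation from the course or from TVM.

```python
import numpy as np

def conv2d_reference(x, weight):
    """Naive 2D convolution (NCHW input, OIHW weight), stride 1, padding 0."""
    n, ci, h, w = x.shape
    co, ci_w, kh, kw = weight.shape
    assert ci == ci_w, "input channels of data and weight must match"
    out_h, out_w = h - kh + 1, w - kw + 1
    out = np.zeros((n, co, out_h, out_w), dtype=x.dtype)
    for b in range(n):                  # batch
        for c in range(co):             # output channel
            for i in range(out_h):      # output row
                for j in range(out_w):  # output column
                    # Window covering all input channels at position (i, j)
                    window = x[b, :, i:i + kh, j:j + kw]
                    out[b, c, i, j] = np.sum(window * weight[c])
    return out

# Hypothetical inputs: one 4x4 single-channel image, two all-ones 3x3 kernels
x = np.arange(16, dtype=np.int64).reshape(1, 1, 4, 4)
w_ones = np.ones((2, 1, 3, 3), dtype=np.int64)
result = conv2d_reference(x, w_ones)
print(result.shape)  # (1, 2, 2, 2)
```

A loop nest like this is slow, but it is handy as a ground truth to compare an optimized implementation against.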

First, we initialize both the input matrix and the weight matrix:

# batch, input_channel_dim, image_height, image_width, output_channel_dim, kernel_size (width and height)

N, CI, H, W, CO, K = 1, 1, 8, 8, 2, 3

# output_height, output_width, assuming kernel has stride=1 and padding=0

OUT_H, OUT_W = H - K + 1, W - K + 1

data = np.arange(... (remaining content hidden)
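The expressions `H - K + 1` and `W - K + 1` above are the stride-1, padding-0 case of the usual output-size formula for convolution. As a small sketch, the helper below computes the general case; the name `conv_output_size` and its `stride` and `padding` parameters are my own illustration and do not appear in the original post.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Output length along one spatial dimension of a convolution.

    With stride=1 and padding=0 this reduces to size - kernel + 1.
    """
    return (size + 2 * padding - kernel) // stride + 1

H, W, K = 8, 8, 3
OUT_H, OUT_W = conv_output_size(H, K), conv_output_size(W, K)
print(OUT_H, OUT_W)  # 6 6
```

With the values used in this post (an 8x8 image and a 3x3 kernel), each of the two output channels is a 6x6 map.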
